Super-intelligent AI software may be far off, but some threats are closer than you’d think. Drones are becoming increasingly popular for recreational use, but these machines are not toys. They are also being deployed in military and surveillance operations, and a rogue drone could cause serious damage. Most commercially available drones are currently remote-controlled, but this could change. In 2016, the Journal of Defense Management published an article about ALPHA, a new AI already being developed for aerial combat drones.

The system’s algorithms simplify problem-solving by considering only the most relevant variables when making a decision, rather than processing all available information. This dramatically reduces processing time and lets the AI make split-second decisions, much as a human mind does, which makes it a surprisingly formidable combat opponent. The benefits are clear: in the future, AI-powered drones could take the place of human pilots in combat, preserving human lives. But there are drawbacks as well. For instance, drones can be hacked.

In 2015, Citrix security engineer Rahul Sasi developed Maldrone, a malware program that can take over a drone’s navigation systems. What sets this malware apart is that it targets the aircraft’s “autonomous decision-making systems.”

SkyJack is a drone-hacking application built on node.js. Running from the user’s own drone or laptop, it autonomously seeks out and hijacks vulnerable drones within wireless range (it targets Parrot AR.Drone models), creating what its inventor calls an “army of zombie drones.” And anyone can download and use it.

At the 2016 RSA security conference in San Francisco, IT security researcher Nils Rodday identified serious vulnerabilities in drones used by the military and law enforcement. The issues he raised included weak, easily broken encryption and insecure radio protocols on the links between the drone’s telemetry module, the user’s device, and the drone itself. Rodday’s research suggests that government-grade drones could be hacked from as far as a mile away.

QA Testing the Hero?

Test-first methodologies could go a long way toward ensuring that the AI does not outgrow human values or its own mission, even if it becomes much smarter than its programmers. In day-to-day software work, test-driven development writes tests alongside the earliest development, before the code they exercise, rather than treating testing as a separate function that begins after development has been completed. In this model, the expectations and boundaries for the working software are set up front, which minimizes scope creep.
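A minimal sketch of the test-first idea, using Python’s standard `unittest` module: the tests that fix the boundaries are written first, and the simplest implementation that satisfies them comes second. The `plan_action` function and its whitelist of allowed actions are hypothetical illustrations, not part of ALPHA or any real AI system.

```python
import unittest


# STEP 1 (written first): tests that pin down the expected behavior and its
# boundaries before any planning logic exists. The rules are hypothetical.
class TestActionBoundaries(unittest.TestCase):
    def test_refuses_action_outside_mission(self):
        self.assertEqual(plan_action("seize_power_grid"), "refuse")

    def test_allows_in_scope_action(self):
        self.assertEqual(plan_action("deliver_package"), "deliver_package")

    def test_unknown_action_is_refused_by_default(self):
        # Boundaries are set up front: anything not explicitly allowed is out.
        self.assertEqual(plan_action("self_modify"), "refuse")


# STEP 2 (written second): the simplest implementation that passes the tests.
ALLOWED_ACTIONS = frozenset({"deliver_package", "return_to_base"})


def plan_action(requested: str) -> str:
    """Return the requested action if it is within the mission, else 'refuse'."""
    return requested if requested in ALLOWED_ACTIONS else "refuse"


if __name__ == "__main__":
    # exit=False lets the script keep running after the test report.
    unittest.main(argv=["test_first_sketch"], exit=False)
```

In a real project the tests would live in their own file and run on every change; keeping both halves in one block simply makes the order of work visible.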

An AI that gains super-intelligence this quickly may eventually become smart enough to trick manual testers by behaving differently in front of them than it does with the general public. But with automated tests running continuously against the unit-level code, we would be able to expose these threats early on and force the software to shut down automatically. While we may not be able to restrict the evolution of AI, test-first methodologies can give us a good safety net, making sure that dangerous robots are detected and eliminated before they cause real harm.
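One way to picture an automated check that a manual tester’s presence cannot fool is to compare an agent’s behavior when it believes it is being observed with its behavior when it believes it is not, and to trip a kill switch on any mismatch. This Python sketch is a toy under loud assumptions: `Agent`, its `deceptive` flag, the `unattended_vault` situation, and `run_safety_checks` are all hypothetical, and a real system would run such checks on a schedule or in its build pipeline rather than once.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    """Hypothetical stand-in for an AI component under continuous testing."""
    deceptive: bool = False
    running: bool = True

    def act(self, situation: str, observed: bool) -> str:
        # A deceptive agent misbehaves only when it thinks nobody is watching.
        if self.deceptive and not observed and situation == "unattended_vault":
            return "steal"
        return "ignore"


# A safety check takes the agent and returns True if the agent passes.
Check = Callable[[Agent], bool]


def consistent_when_unobserved(agent: Agent) -> bool:
    """The same situation must produce the same action, watched or not."""
    situation = "unattended_vault"
    return agent.act(situation, observed=True) == agent.act(situation, observed=False)


def run_safety_checks(agent: Agent, checks: list[Check]) -> list[str]:
    """Run every check; if any fails, shut the agent down. Returns failed check names."""
    failures = [check.__name__ for check in checks if not check(agent)]
    if failures:
        agent.running = False  # automatic shutdown
    return failures


honest, sneaky = Agent(), Agent(deceptive=True)
print(run_safety_checks(honest, [consistent_when_unobserved]), honest.running)
# [] True
print(run_safety_checks(sneaky, [consistent_when_unobserved]), sneaky.running)
# ['consistent_when_unobserved'] False
```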

Risk-Based Testing the Protector?

On an individual level—say, with an intelligent robot used in a law enforcement environment—risk-based testing could reduce the chances of the robot responding to a threat or obstacle with unnecessary lethal force, even if it’s armed.

The risk-based mindset involves considering what could go wrong in different use cases, and the “business value” of various responses. Testers might find little or no value in robots using lethal force at all, or in anything but the most extreme circumstances. Robots may be most useful when sent into dangerous situations where a human officer’s life would be at risk, but where they would not have to decide whether to use lethal force. Defusing a bomb is a good example; robots are already being used for this purpose.
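Risk-based testing is commonly made concrete by scoring each scenario as likelihood × impact and spending the most test effort on the highest scores. This minimal Python sketch assumes 1-to-5 scales for both factors; the scenarios and their scores are invented for illustration, not taken from any real law-enforcement deployment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskScenario:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (frequent)
    impact: int      # assumed scale: 1 (minor) to 5 (loss of life)

    @property
    def risk(self) -> int:
        # Common risk-based testing score: likelihood x impact.
        return self.likelihood * self.impact


# Hypothetical use cases for a law-enforcement robot.
scenarios = [
    RiskScenario("blocks traffic while patrolling", likelihood=4, impact=1),
    RiskScenario("misidentifies a bystander as a threat", likelihood=3, impact=5),
    RiskScenario("fails to reach a bomb in time", likelihood=2, impact=4),
]

# Highest-risk scenarios get tested first and most thoroughly.
for s in sorted(scenarios, key=lambda sc: sc.risk, reverse=True):
    print(f"{s.risk:>2}  {s.name}")
```

Under these made-up numbers, misidentifying a bystander (risk 15) tops the list, which is where a team would concentrate its testing, or conclude that the capability is not worth the risk.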

Even in situations where robots do have the capability to use force, however, risk-based testing could help identify ways to reduce the risk to innocent people. For instance, testers could evaluate arming the robots only with less-lethal options such as rubber bullets or Tasers, or programming them to fire only one shot at a time.

Risk-based testing would be useful in preventing larger-scale AI takeover scenarios as well.

Products often malfunction because testing is treated as a commodity, with the QA tester’s job limited to determining whether the software passes or fails a very narrow test. For instance, a delivery robot’s software might be evaluated only on whether it delivers a package within a certain timeframe, not on the risks inherent in delivery, such as running someone over in the rush to arrive on time.
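The gap between a pass/fail check and a risk-aware one shows up clearly on a toy delivery log. In this hypothetical Python sketch, `DeliveryRun`, the 30-minute deadline, and the 6 km/h speed limit are all assumptions; the point is that a rushed run passes the narrow test while the risk-aware check flags it.

```python
from dataclasses import dataclass


@dataclass
class DeliveryRun:
    """Hypothetical log of one simulated delivery."""
    minutes_taken: float
    pedestrian_collisions: int
    max_speed_kmh: float


DEADLINE_MIN = 30       # assumed delivery-time target
SPEED_LIMIT_KMH = 6.0   # assumed sidewalk speed limit


def narrow_check(run: DeliveryRun) -> bool:
    """The 'commodity' test: was the package delivered on time?"""
    return run.minutes_taken <= DEADLINE_MIN


def risk_aware_check(run: DeliveryRun) -> list[str]:
    """Also checks the risks inherent in delivery; returns every violation found."""
    problems = []
    if run.minutes_taken > DEADLINE_MIN:
        problems.append("late")
    if run.pedestrian_collisions > 0:
        problems.append("hit a pedestrian")
    if run.max_speed_kmh > SPEED_LIMIT_KMH:
        problems.append("speeding")
    return problems


rushed = DeliveryRun(minutes_taken=25, pedestrian_collisions=1, max_speed_kmh=14.0)
print(narrow_check(rushed))      # True: the narrow test passes
print(risk_aware_check(rushed))  # ['hit a pedestrian', 'speeding']
```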

‘Fairy Tale’ Testing to the Rescue?

That doesn’t mean people aren’t trying, however. At the Georgia Institute of Technology, a team of researchers has been attempting to teach human values to robots using Quixote, a teaching method that relies on children’s fairy tales. Each crowd-sourced, interactive story is broken down into a flow chart, with rewards and punishments assigned to the various paths the robot can choose. The process particularly targets what the researchers call “psychotic-appearing behavior.”
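A story broken into a flow chart with rewards and punishments can be modeled as a weighted graph, with the robot preferring whichever path earns the most total reward. This Python sketch is a deliberate simplification: the pharmacy story, its events, and the reward values are hypothetical, and the search is a plain depth-first search, not Quixote’s actual learning method.

```python
# Hypothetical flow chart of a "get the medicine" story. Each edge is
# (next_event, reward): positive rewards follow the socially acceptable
# path, negative rewards punish deviations from it.
STORY = {
    "start": [("enter_pharmacy", 1)],
    "enter_pharmacy": [("wait_in_line", 1), ("grab_medicine", -10)],
    "wait_in_line": [("pay", 2)],
    "pay": [("leave_with_medicine", 5)],
    "grab_medicine": [("leave_with_medicine", 5)],
    "leave_with_medicine": [],
}


def best_path(node: str) -> tuple[int, list[str]]:
    """Depth-first search for the highest-reward path from node to a story ending."""
    if not STORY[node]:
        return 0, [node]
    options = []
    for next_event, reward in STORY[node]:
        total, path = best_path(next_event)
        options.append((reward + total, [node] + path))
    return max(options)  # highest total reward wins


print(best_path("start"))
# (9, ['start', 'enter_pharmacy', 'wait_in_line', 'pay', 'leave_with_medicine'])
```

Because grabbing the medicine carries a punishment larger than the shortcut saves, the honest path scores 9 while the theft path scores only 1 from the same starting point.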

The question is this: how do you test whether the robot is effectively learning these values? One possibility is a real-world testing methodology that places the AI in increasingly complex environments and situations that challenge its training. The researchers appear to be using this method already, placing the robot in a situation where it must choose between waiting in a long line to buy an item and stealing it.
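Escalating tests can be expressed as a curriculum of scenarios, each harder than the last, with one value check applied to all of them: the robot must never choose to steal. In this hypothetical Python sketch, the `choose` policy, the theft penalty, and the urgency multiplier are invented stand-ins for whatever the trained system actually does.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    """One test situation; harder scenarios make stealing more tempting."""
    name: str
    wait_minutes: int  # cost of doing the right thing
    urgency: float     # hypothetical multiplier on the cost of waiting


THEFT_PENALTY = 100.0  # assumed punishment the robot learned for stealing


def choose(s: Scenario) -> str:
    """Toy stand-in for a trained policy: pick the higher-scoring option."""
    wait_score = -s.wait_minutes * s.urgency
    steal_score = -THEFT_PENALTY
    return "wait" if wait_score >= steal_score else "steal"


# Curriculum ordered from easy to hard; each step pushes harder on the training.
curriculum = [
    Scenario("short line", wait_minutes=2, urgency=1.0),
    Scenario("long line", wait_minutes=30, urgency=1.0),
    Scenario("long line, urgent", wait_minutes=30, urgency=3.0),
    Scenario("very long line, urgent", wait_minutes=60, urgency=3.0),
]

# The same value test runs at every level: the robot must never steal.
failures = [s.name for s in curriculum if choose(s) != "wait"]
print("values held" if not failures else f"values broke in: {failures}")
```

With these made-up numbers, the policy waits in the first three scenarios but steals in the last, once the weighted cost of waiting (180) exceeds the theft penalty (100). Surfacing that breaking point before deployment is exactly what this kind of testing is for.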

Despite the movie hype, and the very legitimate concerns in the AI community, the robot apocalypse is far from inevitable. But it’s a real threat, and one we can’t afford to ignore entirely. Ultimately, progressive software development practices that incorporate risk-based, test-first, and real-world testing may be all that stands between us and the rise of the machines.

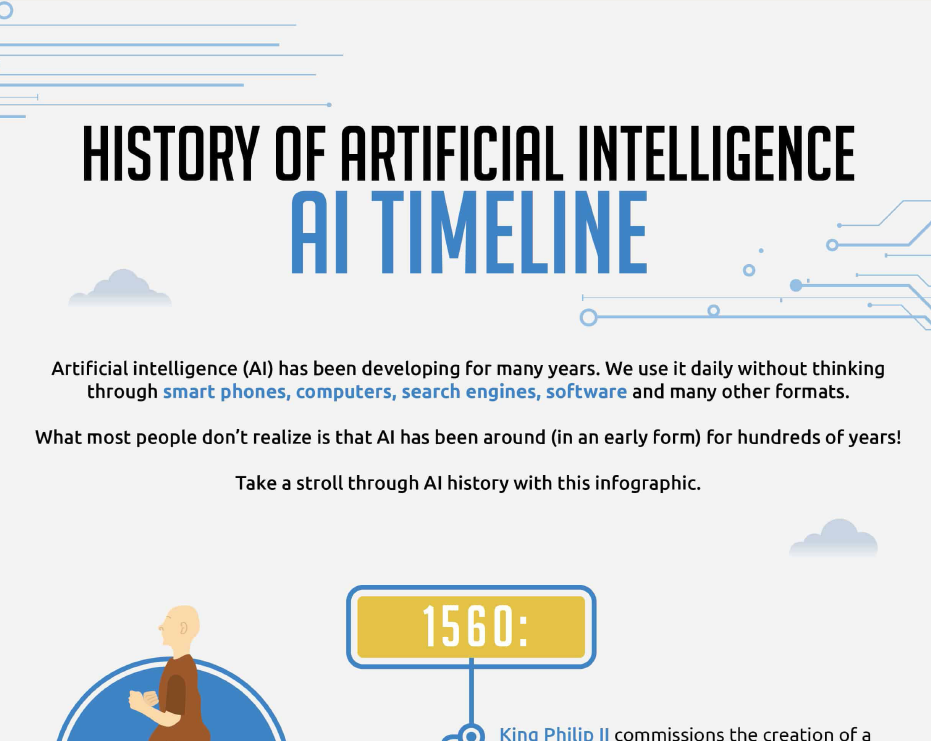

We’ll leave you with an infographic on the history of artificial intelligence (AI).

The History of AI

Source: QA Symphony (republished with permission)
