By Dr. Kerstin Eder[1],[2], Dr. Chris Harper[1],[3], Dr. Evgeni Magid[1],[2] and Prof. Anthony Pipe[4]

“Robots are products. They should be designed using processes which assure their safety and security.” [5]

At the end of October 2013, BBC News reported on the Mars One mission, which plans to establish a permanent human colony of carefully selected volunteers on Mars in 2023. Within just five months of the application period opening, 202,586 people from 140 countries applied for the one-way ticket to Mars. Of these, 705 candidates have now been pre-selected for further testing of their physical and emotional suitability. Mars One is an ambitious initiative, and robots are seen as an essential part of the colonists’ equipment. Specialized robots will explore the planet, deliver materials and goods, inspect colony facilities, perform repairs, assist in collecting samples, and carry out hundreds of other tasks. Their interactions with humans will be informal, unstructured, and highly complex. These robots will work near humans, share in the manipulation of objects, or even make direct physical contact with their human operators as part of collaborative tasks. Learning from demonstration will enable these robots to acquire new skills “on the fly” simply by watching humans or other robots perform tasks. This gives humans an intuitive way to teach robots and allows more flexible human-robot interaction than can be achieved with task-specific, pre-programmed machines.

Do You Trust Your Robot?

A Mars colony will rely heavily on the services of robotic assistants, so their dependability must be assured before they are deployed. Dependability has been defined[6] as “the ability to deliver a service that can justifiably be trusted.” It is an over-arching concept that includes attributes such as safety, availability, reliability, predictability, integrity, and maintainability. Safety, a critical aspect of dependability, concerns the absence of harmful consequences of a robot’s actions (or inaction) for its users and the environment. A key point about safety assurance is that it is a subjective condition of a system’s users as well as an objective property of the system itself. Even if a system’s design contains no flaws and its operation never causes harm throughout its life, users who cannot be assured of that before they start using it will not trust the system and may never use it. Thus, the art of designing dependable systems lies not only in creating a flawless design but in doing so in a way that allows that flawlessness to be demonstrated. This requires careful choices of a system’s architecture and mechanisms, because only technologies whose correct operation can be readily verified and validated are suitable for such applications.

Assuring Safety in Collaborative Operations

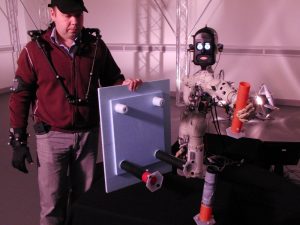

As robots rapidly mature and many become ready for commercialization, roboticists have become aware of their responsibility to provide safety assurance for their technology. Until recently, however, the practical deployment of robotic assistants has been held back by a lack of credible standards and techniques for safety assurance. In traditional robotic applications, as discussed in “Safe and Trustworthy Autonomous Robotic Assistants,” safety has been achieved by confining robots to closed workplaces from which humans are excluded. However, newer systems such as NASA’s Robonaut 2 are designed for collaborative operation with humans in a shared space. Assuring safety in this context is a much more complex problem, requiring a more extensive assessment of both the robot’s technology and its surroundings than was necessary in traditional applications.

Industry standards for the collaborative operation of robots are currently the focus of extensive study by the major international standards agencies. Sub-Committee 2 (SC2) of Technical Committee 184 (TC184) of the International Organization for Standardization (ISO) develops standards for robots and robotic devices, and currently has working groups covering industrial, medical, and service robots. Its Working Group 7 (WG7) has developed the ISO 13482 safety requirements standard for service robots, including physical assistance and mobile servant applications. Working Group 3 of the same sub-committee is developing Technical Specification (TS) 15066, which provides guidance on collaborative operation in industrial applications, including the specification of several collaborative modes of operation and their associated safety requirements.

Central to the guidelines in TS 15066 are hazard identification and risk assessment specific to the collaborative task shared between a robotic assistant and its human operator. Task identification is therefore key to correctly determining all foreseeable hazards. Risk assessment is then performed on these hazards, including the characterization of values such as the maximum allowable speed of the robot’s movement and the minimum separation distance between robot and human, either as static values or as dynamic ranges. Based on the risk assessment, risk reduction strategies can be implemented for hazards where the risk of harm is judged to be unacceptably high.
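To make the risk-assessment step more concrete, the Python sketch below scores each identified hazard of a task and flags those whose risk exceeds an acceptance threshold, i.e. the hazards for which risk reduction would be required. It uses a generic severity-times-likelihood risk matrix. The scales, the threshold `ACCEPTABLE_RISK`, and the example hazards are hypothetical assumptions for illustration, not the method or values of TS 15066.

```python
from dataclasses import dataclass

# Hypothetical scales from 1 (lowest) to 4 (highest). These scales and the
# acceptance threshold are illustrative assumptions, not ISO TS 15066 values.
ACCEPTABLE_RISK = 6


@dataclass
class Hazard:
    description: str
    severity: int    # 1 = negligible injury ... 4 = severe injury (assumed scale)
    likelihood: int  # 1 = very unlikely ... 4 = frequent (assumed scale)

    @property
    def risk(self) -> int:
        # Generic risk-matrix score: severity multiplied by likelihood.
        return self.severity * self.likelihood


def hazards_needing_reduction(hazards):
    """Return the hazards whose risk exceeds the acceptance threshold,
    highest risk first."""
    flagged = [h for h in hazards if h.risk > ACCEPTABLE_RISK]
    return sorted(flagged, key=lambda h: h.risk, reverse=True)


# Hypothetical hazards identified for a hand-over task.
task_hazards = [
    Hazard("arm strikes operator's hand during hand-over", severity=3, likelihood=3),
    Hazard("gripper pinches operator's finger", severity=2, likelihood=2),
    Hazard("dropped work piece hits operator's foot", severity=2, likelihood=4),
]

for h in hazards_needing_reduction(task_hazards):
    print(f"{h.risk:2d}  {h.description}")
```

Under these assumptions only the first and third hazards (scores 9 and 8) would call for risk reduction; the pinch hazard (score 4) would be accepted as is.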

Designing for Reduced Risk

Because the traditional approach of reducing risk by separating the human from the robot cannot be used in collaborative settings, the guidelines in TS 15066 focus on influencing the design of the robot, the joint workplace, and the collaborative task itself so that they include protective measures that ensure the safety of human operators at all times. This may include redesigning tools and workpieces, for example to give them smooth rather than sharp surfaces and low mass, both of which influence the impact force in hazardous situations involving direct contact with humans. A speed and separation monitoring system is essential to continuously track the position of humans within the collaborative workplace. Where hazards arise from direct contact with human operators, whether intended or unintended, a fast contact detection system must feed into a safety-related control system. This control system must be capable of processing context-related information in real time and of activating protective measures when they become necessary.
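The interplay between speed and separation monitoring, contact detection, and the safety-related control system can be sketched as a single decision function. This is a minimal sketch under stated assumptions: the required separation follows a simplified model (the distance the human and robot can close while the robot reacts and brakes, plus a margin), contact is flagged when a measured joint torque deviates from its model prediction by more than a threshold, and all constants and function names are hypothetical.

```python
from enum import Enum


class Action(Enum):
    CONTINUE = "continue"
    REDUCE_SPEED = "reduce speed"
    PROTECTIVE_STOP = "protective stop"


# All parameter values are hypothetical, chosen only for illustration.
HUMAN_SPEED = 1.6       # m/s, assumed worst-case approach speed of the operator
REACTION_TIME = 0.1     # s, assumed sensing and control latency of the robot
STOPPING_TIME = 0.3     # s, assumed time for the robot to brake to rest
MARGIN = 0.1            # m, assumed allowance for sensor uncertainty
TORQUE_THRESHOLD = 5.0  # Nm, assumed contact-detection threshold per joint


def protective_distance(robot_speed, stopping_distance):
    """Minimum separation (m) under a simplified model: distance closed by the
    human during reaction and braking, by the robot during reaction, plus the
    robot's stopping distance and a fixed margin."""
    human_part = HUMAN_SPEED * (REACTION_TIME + STOPPING_TIME)
    robot_part = robot_speed * REACTION_TIME
    return human_part + robot_part + stopping_distance + MARGIN


def contact_detected(measured_torques, expected_torques):
    """Flag contact when any joint torque deviates from the model
    prediction by more than the threshold."""
    return any(abs(m - e) > TORQUE_THRESHOLD
               for m, e in zip(measured_torques, expected_torques))


def safety_action(separation, robot_speed, stopping_distance,
                  measured_torques, expected_torques):
    # Contact detection has priority: any detected contact stops the robot.
    if contact_detected(measured_torques, expected_torques):
        return Action.PROTECTIVE_STOP
    required = protective_distance(robot_speed, stopping_distance)
    if separation < required:
        return Action.PROTECTIVE_STOP
    if separation < 1.5 * required:  # hypothetical warning band
        return Action.REDUCE_SPEED
    return Action.CONTINUE


print(safety_action(2.0, 0.5, 0.1, [1.0, 2.0], [1.1, 2.2]).value)  # continue
print(safety_action(1.0, 0.5, 0.1, [1.0, 2.0], [1.1, 2.2]).value)  # reduce speed
print(safety_action(2.0, 0.5, 0.1, [1.0, 2.0], [1.2, 9.0]).value)  # protective stop
```

Checking for contact before separation mirrors the requirement that contact detection feed directly into the safety-related control system; the intermediate speed-reduction band is an added assumption.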

The impact force of dynamic contact, its duration, and the location (body region) of the contact, all key parameters for safety assessment, can vary greatly between collaborative tasks and, in particular, from person to person. We see this variation even when human-robot collaboration is restricted to shared workplaces such as those on a space station or in a flexible manufacturing environment. This severely limits the generality of safety calculations because, in principle, person-specific data are required. It remains to be determined how this problem of person-specific characterization can be addressed. Potentially, a “calibration” phase may be required before operation starts, so that the robot co-worker can be customized to its human operator.
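One conceivable form of such a “calibration” phase is to record operator-specific factors that adjust default per-body-region contact-force limits before operation starts. The sketch below is purely hypothetical: the body regions, the placeholder limit values (not biomechanical data), the `OperatorProfile` structure, and the rule for combining them are all assumptions for illustration.

```python
from dataclasses import dataclass, field

# Placeholder default contact-force limits per body region (N).
# These numbers are illustrative assumptions, NOT biomechanical data.
DEFAULT_FORCE_LIMITS = {"hand": 100.0, "forearm": 120.0, "chest": 80.0}


@dataclass
class OperatorProfile:
    name: str
    # Hypothetical per-region scaling factors recorded during calibration;
    # a factor below 1.0 means this operator tolerates less than the default.
    region_factors: dict = field(default_factory=dict)


def calibrated_limits(profile: OperatorProfile) -> dict:
    """Combine default limits with operator-specific factors. Factors are
    capped at 1.0 so calibration can only tighten, never relax, a limit."""
    limits = {}
    for region, default in DEFAULT_FORCE_LIMITS.items():
        factor = min(profile.region_factors.get(region, 1.0), 1.0)
        limits[region] = default * factor
    return limits


def contact_allowed(limits: dict, region: str, predicted_force: float) -> bool:
    # Body regions without a calibrated limit are treated as no-contact zones.
    return predicted_force <= limits.get(region, 0.0)


operator = OperatorProfile("operator A", region_factors={"hand": 0.5, "forearm": 1.2})
limits = calibrated_limits(operator)
print(limits)                                  # {'hand': 50.0, 'forearm': 120.0, 'chest': 80.0}
print(contact_allowed(limits, "hand", 60.0))  # False
```

Capping each factor at 1.0 is a deliberately conservative choice: a mistaken calibration can only make the robot more cautious than its uncalibrated configuration, never less.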

Just-in-Time Certification

ISO TS 15066 is expected to be publicly released later this year. It promotes a task-specific approach to safety assurance that requires the hardware and software, the work environment, and the task specification to be available for hazard analysis and risk assessment in their final form before the collaborative robotic system is deployed. Furthermore, it assumes that all hazardous operating conditions can be both predicted and countered before deployment. While this approach may be appropriate for task-specific, pre-programmed robots, it appears inherently at odds with the concept of robots learning new tasks “on the fly”, which leaves task identification, definition, and training to the human operator, whose safety is of paramount importance. Hence, each newly learnt task, as well as the learning process itself, must be safety assured. For any learning-from-demonstration technique to become viable, safety must be an integral part of the learning process. Consequently, task-specific safety assurance may need to be performed, at least in part, at runtime.

“Just-in-time” certification[7] is firmly based on the use of formal methods, both at design time and at runtime. It exploits the observation that traditional safety assessment focuses on checking whether the pre-defined system behaviour meets a set of pre-defined safety requirements. If this checking step could be automated, its execution could reasonably be shifted to runtime. This requires encoding the safety requirements in a form suitable for runtime checking. At runtime, a monitor continuously checks compliance with these requirements and prevents any behaviour that would violate them. While such monitors are traditionally generated at design time and applied at runtime, Runtime Verification techniques enable them to be generated at runtime. Learning-from-demonstration approaches could thus be constrained at runtime to deliver only learning results that are considered safe. This is one of the research topics under investigation in the Verification and Validation for Safety in Robots research group at the Bristol Robotics Laboratory.
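The idea of encoding safety requirements in executable form and checking them at runtime can be illustrated with a small state monitor. In the sketch below, hypothetical requirements are encoded as predicates, `build_monitor` turns them into a monitor at runtime, `execute` blocks any proposed state that would violate a requirement, and `accept_demonstration` admits a learnt trajectory only if every state along it is safe. All names and thresholds are assumptions. Genuine Runtime Verification monitors typically check temporal properties over execution traces, whereas this sketch checks only state invariants.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class State:
    speed: float       # m/s, commanded end-effector speed
    separation: float  # m, distance to the nearest human
    x: float           # m, end-effector position along one workspace axis


# A requirement pairs a name with a predicate that must hold in every state.
Requirement = Tuple[str, Callable[[State], bool]]

# Hypothetical requirements encoded as executable predicates; thresholds
# are illustrative only.
REQUIREMENTS: List[Requirement] = [
    ("speed limit", lambda s: s.speed <= 0.25),
    ("minimum separation", lambda s: s.separation >= 0.5),
    ("workspace bound", lambda s: 0.0 <= s.x <= 1.2),
]


def build_monitor(requirements: List[Requirement]) -> Callable[[State], List[str]]:
    """Turn a list of requirements into a monitor that returns the names
    of all requirements a proposed state would violate."""
    def monitor(state: State) -> List[str]:
        return [name for name, holds in requirements if not holds(state)]
    return monitor


def execute(monitor: Callable[[State], List[str]], proposed: State) -> Optional[State]:
    """Pass the proposed state on only if it violates no requirement;
    otherwise block it (None stands for 'hold position')."""
    return proposed if not monitor(proposed) else None


def accept_demonstration(monitor: Callable[[State], List[str]],
                         trajectory: List[State]) -> bool:
    """Admit a learnt trajectory only if every state along it is safe."""
    return all(not monitor(s) for s in trajectory)


monitor = build_monitor(REQUIREMENTS)
demo = [State(0.1, 1.0, 0.2), State(0.2, 0.8, 0.6), State(0.4, 0.6, 0.9)]
print(accept_demonstration(monitor, demo))  # False
print(monitor(demo[2]))                     # ['speed limit']
print(execute(monitor, demo[2]))            # None
```

Because the requirements are plain data, a new requirement can be added and a fresh monitor built while the system is running, which is the property that would allow learning from demonstration to be constrained at runtime.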

Living with Robots

Robotic assistants will become an essential part of our lives in the near future, with applications in many areas from healthcare, assisted living, and education to manufacturing, rescue operations, and space missions. Demonstrable safety is a key prerequisite for us to build trust in this technology. Safety assurance for autonomous robotic assistants is a multi-disciplinary research area. The Safe and Trustworthy Autonomous Assistive Robots (STAARs) workshops at the University of Bristol have been set up to establish collaborations among experts from Engineering, Science, Social Sciences, and Law. The workshops seek to promote a holistic systems approach, one that informs engineering decisions during the design stage and thereby enables engineers to meet ethical, societal, and legal requirements as well as certification standards from the outset.

Acknowledgements

The Safe and Trustworthy Autonomous Assistive Robots (STAARs) initiative at the University of Bristol is funded by the Institute for Advanced Studies. The BRL team was supported by EPSRC grants EP/K006320/1 and EP/K006223/1, part of the Trustworthy Robotic Assistants project (http://www.robosafe.org), which also involves the University of Hertfordshire (EP/K006509/1) and the University of Liverpool (EP/K006193/1).

References

[1] Verification and Validation for Safety in Robots Research Group, Bristol Robotics Laboratory, Bristol, UK

[2] Department of Computer Science, University of Bristol, Bristol, UK [www.bristol.ac.uk]

[3] Avian Technologies Ltd., UK

[4] Engineering, Design and Mathematics Department, University of the West of England, Bristol, UK [www.uwe.ac.uk]

[5] EPSRC, Principles of Robotics [www.epsrc.ac.uk]

[6] A. Avizienis, J.-C. Laprie, B. Randell, and C. Landwehr, “Basic concepts and taxonomy of dependable and secure computing,” IEEE Transactions on Dependable and Secure Computing, vol. 1, no. 1, pp. 11–33, Jan-Mar 2004.

[7] J. Rushby, “Just-in-time certification,” in 12th IEEE International Conference on the Engineering of Complex Computer Systems (ICECCS), 2007, pp. 15–24.
